It is the 28th of July 2016. A crowd has gathered around a marked-off area near the entrance of Level 2, COM1. Among them are NUS President, Professor Tan Chorh Chuan, and NUS Provost, Professor Tan Eng Chye.

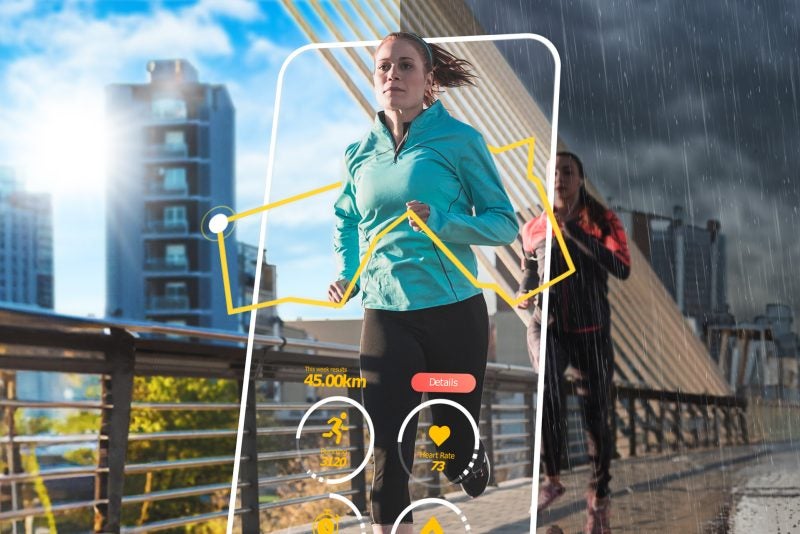

Holding court is third-year Computer Science PhD student Lan Ziquan, sporting a fresh buzz cut and dressed in a pale blue long-sleeved shirt, dark trousers and coffee-coloured, laced-up sneakers: not the typical Computing student look. To his right is a large 50-inch monitor, and to his left, by his feet, is a modest red Parrot Bebop drone. He swipes across the small Nexus 7 tablet in his hand; an aerial photo of an eagle soaring above a shoreline appears on the large display, and he begins.

“Would you like to take a photo like this?” he asks his audience. “Or like this…” he continues, flicking into view another scenic aerial image, a grand old villa overlooking a precipitous cliff, “from high above…anytime, anywhere?” Then Lan declares confidently, “In the near future, we are going to wear flying cameras on our wrists, just as commonly as having smartphones in our pockets. You can just remove it, toss it into the air, and it flies and takes photos for you. Today we are taking the first step to tackle the greatest challenges to help people to use this flying camera naturally and seamlessly.”

Watching intently from opposite ends of the demonstration area are Lan’s research partner, final-year Computer Engineering student Mohit Shridhar, and their supervisor, Professor David Hsu, an expert in robotics and artificial intelligence. Together with Associate Professor Zhao Shendong, who specialises in human-computer interaction, Lan, Shridhar and Hsu have developed a new, more intuitive way of controlling drone photography that makes it almost effortless, even for novices.

“Imagine how we interact with [digital] images today. We use very intuitive gestures like pinch-zoom and swipe-panning to manipulate this still image,” Lan says, while demonstrating those gestures on the tablet. “Imagine if you could ‘manipulate a live image’ while you are framing and composing a shot.”

Taking a photo with a camera on a drone is obviously different from, and requires more manoeuvring than, using a camera held in your hands. If you are holding the camera and want a different angle or view, you simply adjust your hands, arms and/or body until you see the composition you want on your display. Having your camera perched on a drone, however, adds a layer of complication. Not only do you have to fiddle with a controller and deal with the mechanics of piloting the drone along three axes (i.e. forwards-backwards, left-right, up-down), but you also have to imagine the drone’s point of view and fly it to a spot in the air from which you think you can capture the shot you want.

With Lan’s new system, the drone first autonomously takes several exploratory shots of the object you want to capture, from various angles. You are shown a gallery of these images on your tablet, and you pick the one that best approximates the scene you hope to capture. The drone then flies back to the position that affords the view you selected.
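The article does not say how XPose picks its exploratory viewpoints. As a rough illustration only, assuming the drone spaces its shots (five, the number mentioned later in the story) evenly on a circle around the object and turns its camera back towards it, the viewpoint geometry might look like this. The function and parameter names are hypothetical, not taken from the XPose paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Viewpoint:
    x: float    # metres, in the drone's world frame
    y: float
    z: float
    yaw: float  # heading in radians, chosen so the camera faces the object


def exploration_viewpoints(obj, radius, height_above, n=5):
    """Hypothetical helper: n viewpoints evenly spaced on a circle around obj.

    obj is the (x, y, z) position of the object of interest.
    """
    ox, oy, oz = obj
    views = []
    for i in range(n):
        theta = 2 * math.pi * i / n  # angle of this viewpoint around the object
        x = ox + radius * math.cos(theta)
        y = oy + radius * math.sin(theta)
        # Point the camera back at the object's centre.
        yaw = math.atan2(oy - y, ox - x)
        views.append(Viewpoint(x, y, oz + height_above, yaw))
    return views


for v in exploration_viewpoints((0.0, 0.0, 0.0), radius=3.0, height_above=1.5):
    print(f"x={v.x:+.2f} y={v.y:+.2f} z={v.z:.2f} yaw={math.degrees(v.yaw):+.0f} deg")
```

A real system would also have to check that each viewpoint is reachable and free of obstacles; the circle is simply the most basic way to see an object "from various angles".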

Then you can adjust the composition of the image by intuitively manipulating the ‘live image’ feed on your tablet. For example, you can pinch-zoom in or out on your primary object (e.g. the Merlion), or select and drag other objects in the scene (e.g. surrounding buildings) to tell the system where you want them to appear in your final shot. From these adjustments to the ‘live image’, the system autonomously repositions the drone in the air until it can capture the shot you want.
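The article does not spell out how XPose turns gestures into drone motion. Under a simple pinhole-camera assumption (ours, not the authors'), two gestures map onto motion fairly directly in the simplest case: an object's apparent size is inversely proportional to its distance, so pinching it to s times its size means flying to 1/s of the current distance, and dragging a point sideways in the image corresponds to rotating the camera by the difference in viewing angles. The helper names below are hypothetical.

```python
import math


def distance_after_pinch(current_distance_m, pinch_scale):
    """Pinhole model: apparent size is proportional to 1 / distance.

    Enlarging the object by pinch_scale therefore requires dividing the
    camera-to-object distance by the same factor.
    """
    if pinch_scale <= 0:
        raise ValueError("pinch_scale must be positive")
    return current_distance_m / pinch_scale


def yaw_change_for_drag(u_from_px, u_to_px, focal_length_px):
    """Yaw rotation (radians) that moves an image point from column u_from to u_to.

    Columns are measured in pixels from the image centre, positive to the right.
    A positive result means turning right, which shifts scene points leftwards
    in the image. Assumes pure rotation about the camera centre.
    """
    angle_from = math.atan(u_from_px / focal_length_px)
    angle_to = math.atan(u_to_px / focal_length_px)
    return angle_from - angle_to


# Pinch the Merlion to twice its size while 20 m away: fly to 10 m.
print(distance_after_pinch(20.0, 2.0))
# Drag a building from 300 px right of centre to the centre, with f = 600 px.
print(math.degrees(yaw_change_for_drag(300.0, 0.0, 600.0)))
```

Placing several objects at once generally needs the drone to translate as well as rotate, so a full system would solve for a whole camera pose rather than applying these rules one gesture at a time.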

The system is called ‘XPose’, a portmanteau of ‘explore’ and ‘compose’, its two main features. During the President and Provost’s visit, XPose performs all its tricks flawlessly, and the demonstration ends with a happy group selfie with the guests. Lan, Shridhar and Hsu look visibly relieved when it is all over. No one tells the guests that this was actually the first time they had managed to get through the entire demonstration without issue.

Lan grew up in Tianjin, China, and attended one of the top schools in the city, Yao Hua Middle School. He developed an interest in robotics early in high school, and had medalled at a few provincial and national robotics competitions by the time he was 14 years old. Shortly after, in mid-2006, one of his teachers, Mr. Zhang, told him about Singapore’s Ministry of Education’s Senior Middle 2 scholarship programme for outstanding students, and encouraged him to apply. He was successful, so he skipped his final year of high school and moved to Singapore in December 2006 to begin the then 20-month ‘bridging’ portion of the scholarship programme. But he was still undecided about what he should pursue. “Because of my interest in robotics, I was considering Computer Science, Electrical Engineering and Mechanical Engineering,” Lan said.

“So I Googled ‘NUS Robotics’ and David’s profile was one of the first items to appear,” he laughed. “I emailed him asking what programme he would recommend, based on my interests in ‘the brain’, artificial intelligence, decision making and mechanics.” Hsu responded with a long email, detailing the core differences across the fields. “He said,” Lan recalled, “‘If you want to learn how a robot works, study computing. If you want to create a chip, study electrical engineering.’ I didn’t want to learn to build a robot–I wanted to learn how to programme it.”

It was decided. In August 2008, Lan joined NUS Computing to do a double degree programme in Computer Science and Applied Mathematics. When he participated in the Undergraduate Research Opportunities Programme (UROP) in his second year, he finally met Hsu, who was one of the course coordinators. “He was very strict,” Lan mused. Unsurprisingly, Hsu had no recollection of the email exchange that had figured so prominently in Lan’s route to pursuing a career in computer science.

Lan went on to work with Zhao for both his UROP and Final Year Project (FYP). He completed his undergraduate programme in 2013 and, like every graduate before him, faced the age-old conundrum, ‘What next?’. “At the time, computer science graduates were commanding very high starting salaries, between $6,000 and $8,000 at prominent tech companies. But I wasn’t interested in being told what to do,” Lan said, matter-of-factly. So, with the encouragement of a friend who was also contemplating graduate studies, he applied for and was accepted into the NUS Graduate School for Integrated Sciences (NGS), where students pursue interdisciplinary education and research, and began his PhD programme in August 2013. There, once again, Lan’s affinity for robotics led him to Hsu, who was also part of the panel of supervisors for the NGS programme.

“David didn’t let me join his lab right away though,” said Lan wryly. “He had a project in mind for me, but before he let me work on it he asked, ‘Do you know anything about drones?’”

“No.”

“Do you know SLAM (simultaneous localisation and mapping algorithm)?”

“No.”

“Do you know the Kalman filter?”

“No.”

“Do you know the PID controller (proportional–integral–derivative controller algorithm)?”

“No.”

“Do you know ROS (robot operating system)?”

“No.”

Prior to working with Lan, Hsu had been working on self-driving cars. When it came time to produce videos of his autonomous car in action, he found it hellish having to chase the car around with a camera. When contemplating different strategies to best manage this problem, it hit him: “I need an autonomous flying camera to tail my autonomous car!” And so, the need to film a self-driving car birthed the idea for a self-flying, self-exploring, and also-good-for-selfies camera.

To start, Hsu gave Lan a paper: ‘Camera-Based Navigation of a Low-Cost Quadrocopter’, by Engel, Sturm and Cremers (2012), in which a drone successfully followed a predefined path in the air. The algorithm did not quite meet their requirements, but at the time the study’s findings were the most promising and the most relevant to what Lan and Hsu were trying to achieve. “Localisation, which is knowing where it is in any given environment, is an inherent difficulty for most autonomous robots,” explained Lan. “But we figured that if we could get at least part of our idea to work using their algorithm, then at least in theory we knew that what we were trying to do was possible.” So Lan decided to employ techniques similar to those used in the Engel et al. (2012) paper to develop a photo-taking system for drones.

Lan had no background in the area, so he spent weeks learning all the basics of drone systems from scratch. Eventually, after two months of hard work and consultations with Hsu on concepts he struggled with, Lan managed to replicate the Engel et al. (2012) study. “Replicating the study proved to David that I understood the concepts and could use their study as a starting point or a sandbox for our idea,” he said.

That did the trick: in October 2013, Hsu took Lan in. “Ziquan showed that he was mature, independent, hardworking and willing to try out new ideas. People with these qualities generally make up for whatever lack in knowledge over time,” observed Hsu. By February 2014, they knew that they wanted their system to be able to take multiple exploratory shots of the object of interest. Lan spent the first six months building a desktop prototype in an open-source simulator. When he showed his work to Hsu and Zhao, Zhao told him to build a mobile app for the system as soon as possible, because they needed to properly compare the performance and user experience of drone photography with their system against conventional drone piloting and photo-taking with the drones’ native apps, which are all built for mobile devices.

Consequently, Lan spent the next six months learning how to build mobile applications and then building an Android app interface for his system. The following year was a blur of trying anything he could think of to get the drone to reliably orbit an object. None of the methods he tried worked, including vision-based tracking, motion capture and various SLAM algorithms, which let a system work out where it is in an environment while simultaneously building a map of that environment. In the latter half of 2015, Zhao felt that the system needed something else to really make it shine, so they began experimenting with directly manipulating the ‘live image’. Thus the composition feature, which would turn out to be the easiest to demonstrate and an audience favourite, was added to the system. Lan remembers the first two years as a period of extensive trial and error, in which he doggedly tried many ideas and techniques that ended up failing, seemingly living by the ‘fail fast, fail often’ mantra popular in the tech industry.

After months of trying many different ideas, it dawned on Lan that they did not need the drone to really understand its environment. “Tracking three-dimensional objects in a dynamic environment with a flying camera is technically very challenging. But if we simply want to take a photo, all we really care about is a static scene. So let’s forget about tracking moving objects and track space instead,” explained Lan. So, instead of getting the system to map and track the objects in the scene, he changed tack and programmed it to track their locations in space, i.e. the X, Y and Z coordinates of the objects, rather than the objects themselves.

At about the same time, Shridhar, who was in the fourth year of his Computer Engineering programme, was completing his NUS Overseas Colleges (NOC) stint in Silicon Valley, California, USA. As part of the NOC programme, he was interning as a computer vision engineer at a start-up working on augmented reality, and happened to be working with SLAM. He knew that when he returned to NUS, he would be bored taking only three modules in his final year, and he had always wanted the opportunity to do some work in robotics. So, like a comic book hero with a quaint, parallel ‘origin story’, he too took to Google with two keywords: NUS Robotics.

Like Lan, Shridhar found Hsu and emailed him explaining that he would like to work on a robotics project for a year. It took some persistence to get the attention of the preoccupied professor, but it paid off. After about a month and a couple of follow-up emails, in January 2016, Hsu introduced Shridhar to Lan, and the pair began working together to figure out how to get the drone to fly reliably and understand where it is in the environment (i.e. localisation), the problem that had been plaguing Lan.

Having just returned from Silicon Valley, Shridhar suggested they try a promising new SLAM algorithm from a paper he had heard about: ‘ORB-SLAM: A Versatile and Accurate Monocular SLAM System’ by Mur-Artal, Montiel and Tardós (2015), winner of the 2015 IEEE Transactions on Robotics Best Paper Award. Initially, there was no evidence that the ORB-SLAM algorithm would work with drones, but they later found a workshop paper describing the successful use of ORB-SLAM with the same Parrot Bebop drone they were using. With that verification, they began integrating the algorithm into their system.

For the next four months, they tested their modifications with ORB-SLAM in a simulator and produced good results. By May 2016, they were satisfied enough with the results to begin testing their system on a real drone. The following three months were the most productive, and the most destructive, of the project. The drone drifted a lot because its built-in camera produced poor-quality images; for a drone, that is akin to having very bad eyesight. It could not even see its environment clearly, much less tell where it was in it. So it performed the way you would if you were asked to pilot a drone blindfolded. Upgrading the camera was not an option because most drones’ cameras are built in, fixing the payload (i.e. weight) on which the drone’s flight capabilities depend. Besides, they wanted their system to work under the most basic conditions.

Predictably, experimenting with a near-blind autonomous drone did not pass without incident. Once, Lan was testing the drone alone in the lab when it suddenly ascended sharply, hit the ceiling and then crash-landed on the floor. The drone’s battery immediately started smoking, so Lan rushed over, popped off the scorching battery with his bare hands, dropped it on the floor, wrenched open the lab door, and kicked the battery out of the smoke-filled lab. Campus security showed up and he had to explain what had happened. After the fire died and the battery cooled, Lan examined it and found a pushpin stuck in it, which meant that the drone had impaled itself on a rogue pushpin in the lab’s ceiling when it suddenly shot upwards. That drone was completely destroyed, one of only two drone casualties in the course of the project.

Their system still could not tell the drone where it was in its environment. The drones crashed every day for two months, breaking at least eight propellers in that period alone. But in those months, Lan and Shridhar also ploughed through numerous technical papers that addressed problems similar to theirs, tested a slew of the solutions those studies proposed, and moved on whenever one did not work.

Lan and Shridhar worked on the system’s problems in parallel. Shridhar, the younger of the two, worked methodically, addressed each problem logically and devised solutions that were based on sound science. Lan, on the other hand, preferred wrangling problems like a cowboy, implementing anything he could think of, however wacky. “Mohit does things properly, scientifically. I’m more hack-y,” Lan admitted. “All I cared about was hacking through it and making it work. We could figure out how to explain it all afterwards.”

In the end, though, it was one of Lan’s seemingly random ‘hacks’ that fixed the drifting problem. Counterintuitively, he tweaked the system’s parameters to lower the positional accuracy required of the drone during the ‘explore’ phase, in which it takes photos of the object of interest from five different angles. The drone stopped drifting: it no longer had to reach an exact location to take an exploratory photo, and arriving within the vicinity was sufficient. The system was not perfect, but it was working.
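The article gives no actual parameter values, but the effect of the fix can be illustrated with a hypothetical arrival test. Assuming the controller treats a waypoint as reached once the estimated position lies within some tolerance of it, a noisy position estimate that jitters around the target never satisfies a tight tolerance, so the drone keeps correcting; a looser tolerance lets it accept "close enough" and move on. The function name, offsets and tolerances below are made up for illustration (`math.dist` needs Python 3.8 or later).

```python
import math


def has_arrived(estimated_position, target, tolerance_m):
    """Return True if the estimated (x, y, z) position is within tolerance_m of target."""
    return math.dist(estimated_position, target) <= tolerance_m


target = (3.0, 0.0, 1.5)

# Illustrative estimation errors (metres), standing in for a poor camera feed.
noisy_offsets = [(0.30, 0.00, 0.00), (-0.20, 0.20, 0.00), (0.00, -0.25, 0.10)]
estimates = [tuple(t + e for t, e in zip(target, off)) for off in noisy_offsets]

for tolerance in (0.1, 0.5):
    arrived = [has_arrived(p, target, tolerance) for p in estimates]
    print(f"tolerance {tolerance} m -> arrived: {arrived}")
```

Every estimate here is roughly 0.27 to 0.3 m off, so the 0.1 m tolerance rejects all of them while the 0.5 m tolerance accepts all of them. For exploratory photos, that trade of precision for stability costs little, since the user only needs a roughly representative view.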

“We showed David the solution and he was excited,” said Lan, grinning. Soon after, Lan, Shridhar and Hsu had the opportunity to present their work to NUS’ President and Provost, who were scheduled to visit the School of Computing at the end of July 2016. Lan and Shridhar spent weeks preparing. They tested and selected an appropriate ‘object of interest’ and location, modified the system’s flying patterns, tested the system repeatedly, styled their presentation after TED talks and did at least five complete dry runs. Lan even got a bad haircut the day before the event.

Fortunately, at actual showtime, XPose behaved perfectly. Since then, they have demonstrated their drone to several other prominent visitors, including the Minister for Education, Ong Ye Kung, and the Chief Technology Officer of Panasonic. “There were multiple scary moments when my heart sunk and I expected the system to crash,” Hsu confessed, “But every time, the system recovered and survived very challenging situations.”

“A flying camera is a human-centred autonomous system. Robot autonomy enables seamless, natural interaction between humans and the autonomous robot. Throughout the project, we integrated interaction design and system design. The interdisciplinary collaboration between Zhao Shendong, a leading expert on HCI, and us gave this project unique strengths. We learned a lot from each other,” Hsu added.

It was time for them to publish their work, but the first attempt did not go well. They wrote a paper and submitted it to the Special Interest Group on Computer-Human Interaction (SIGCHI) conference, but in December 2016 they received notice that it had been rejected. They had forgotten the old adage, ‘play to your audience’: their paper had no story, and they had neglected to highlight the strengths of their system in terms of user interaction, which was what that audience cared about. By then, Shridhar had completed his bachelor’s degree in Computer Engineering.

Lan and Shridhar regrouped in January 2017, rewrote their paper, produced a new video of their system, solicited feedback from their lab peers, refined both the paper and the video based on their peers’ comments, and submitted their work to the Robotics: Science and Systems (RSS) conference. Their efforts were not in vain: their revised paper, ‘XPose: Reinventing User Interaction with Flying Cameras’, was rated highly and accepted in April 2017. “The RSS reviewers appreciated the technical challenges in our work,” Shridhar said. They were thrilled; it was Shridhar’s first publication and Lan’s first as first author. For Lan, having the paper accepted at RSS was enough.

In July 2017, at the conference at the Massachusetts Institute of Technology (MIT) in Cambridge, Massachusetts, USA, Lan was fortunate to be among the first presenters on Day 1. His five-minute presentation went well, and Shridhar, who was in the crowd, noted that the audience seemed impressed, particularly during the ‘composition’ portion of the demonstration. They also had to present their work at the poster session that evening, so Hsu suggested that they push themselves to show more scenarios of what XPose could do, along with its technical framework: content they had not been able to squeeze into the earlier presentation. So Shridhar put together a new video while sitting in the audience as the other presentations went on throughout the day. Lan and Shridhar also tried to anticipate any tricky questions that might come up during the poster session and prepared responses.

Come poster time, they gave it their all and made the most of the opportunity to show what XPose could do. Researchers, scientists and engineers from universities, labs and robotics companies around the world visited and appeared interested in, and impressed by, the system’s real-world applications. Later in the evening, a large group of academics came by with sophisticated technical and design questions that, thanks to their preparation, Lan and Shridhar fielded comfortably. After that, they spent the next two days relaxing and enjoying the rest of the conference.

In the afternoon of Day 3, Lan and Shridhar found seats near the back of the Kresge Auditorium and waited as all the other conference attendees streamed in for the conference awards presentation. The first award presented was for the Best Student Paper. “The ‘Best Student Paper’ and ‘Best Paper’ finalists were announced a month prior, and we weren’t shortlisted so we weren’t expecting anything,” said Shridhar. “When we saw the Best Student Paper award presented first, for some reason we assumed there was no Best Systems Paper this year.” The Best Systems Paper Award in Memory of Seth Teller ‘is given to outstanding systems papers presented at the RSS conference. The awards committee determines each year if a paper of sufficient quality is among the accepted papers and may decide not to give the award.’

Before they realised what was happening, the next award category was announced, and their names and the title of their paper flashed across the giant screen on stage: they were the last of the three finalists for the Best Systems Paper Award. The people around them congratulated them, but they were too surprised to react; in a daze, they simply made their way from the back of the auditorium to join the other two teams already on stage.

As soon as they arrived on stage and stood next to their peers, they were announced as the winners of the 2017 RSS Best Systems Paper Award. They were completely stunned. There is a photo of Lan, agape, and Shridhar, inscrutable, staring at each other in shock. “Everything, from finding out we were finalists, to being announced the winner, happened in less than 3 minutes,” recalled Shridhar. “There was no drumroll, no time wasted. You have to remember, these are robotics scientists. There’s no penchant for drama,” he quipped, with his trademark easy chuckle.

“I looked for David in the audience, and spotted him at the back, looking very pleased,” said Lan. “We worked hard on this for three years and did not publish any results in between; it would not have been good enough. A great result is usually not a bunch of increments stacked together,” said Hsu, reflecting on their work. “This is the result of three years of exploration, creativity, and dedicated effort. I am truly proud of what Ziquan and Mohit have achieved.”

That night, they all celebrated their achievement with a lobster dinner. True to the robotics scientist’s distaste for wasted time, Hsu turned to Lan before the first course had even arrived and asked, “So, what’s next? When are you going to get another best paper award?”

by Toh Tien-Yi

Paper:

XPose: Reinventing User Interaction with Flying Cameras