Harnessing the Power of Music AI to Heal Stroke Patients

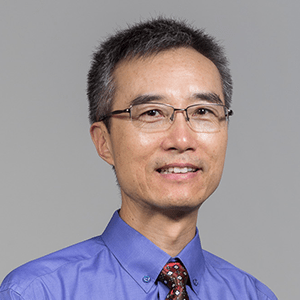

Many people, including Wang Ye, can attest to the healing power of music. On bad days, it helps us relieve stress, feel calmer, and boost our moods. “When I have difficulties in life, I feel that music really helps me to relax and to relieve something,” says Wang, an associate professor at the School of Computing.

Given its therapeutic effects, music is increasingly being used as a form of medicine — piped into neonatal intensive care units and oncology waiting rooms, and administered as adjunct therapy to treat autism, asthma, depression, anxiety, and a range of brain disorders including Parkinson’s disease, epilepsy, and stroke.

Wang, who studies how sound and music computing can aid human health, now wants to bring such music intervention to stroke patients in Singapore. He’s particularly interested in how singing can accelerate the recovery process, and has invented an AI system, called CocoLyricist, to help patients write their own songs.

In August, Wang, together with nurse clinician scientist Catherine Dong from the NUS Yong Loo Lin School of Medicine, began a two-year trial with a small group of stroke patients to study the use of CocoLyricist as a method to retrain the brain.

Millions of brain cells are destroyed when a stroke occurs. “You can’t repair these neurons but you can use technology like mine to help build more connections between the ones that remain,” Wang says. “When patients are able to create their own songs, which tell their own story, it’s especially meaningful and helpful for them to connect with themselves.”

Creating singable lyrics

The idea for CocoLyricist was born during the Covid pandemic, when Wang realised how difficult it was for stroke patients to physically travel from their home to the hospital to receive music therapy. “Also, there’s the problem of traditional music therapy being very manpower intensive — one music therapist can only handle one or a few patients at a time,” he says.

Instead, Wang believed he and his team could create “technology that could be accessible to anybody with a laptop, mobile phone, tablet, or similar device.” Moreover, CocoLyricist — which alludes to the notion of “co-creation or collaboration between a machine and the human being” — could tap the powers of AI for singing and songwriting.

Wang’s new system stems from a series of projects his lab conducted over the past three years on how AI can help generate lyrics, which in turn can aid language learning. “AI-Lyricist was more exploration-based research. But with CocoLyricist, we have a more concrete application,” he says. “So it’s a nice continuation from more theory-oriented to more practical-oriented research.”

“All the technologies we developed for AI-Lyricist will somehow be integrated into the new system,” he adds.

These include XAI-Lyricist, a system that helps users “generate meaningful, sensible, and usable lyrics,” says Wang. Language models, like those that power ChatGPT, make lyric composition faster and easier than ever before. Despite their impressive performance, such models face a key problem: they output lyrics as plain text without any musical notation, making it difficult for composers and singers to tell whether the generated lyrics can be sung harmoniously with a melody.

But XAI-Lyricist overcomes this problem by using musical prosody to guide language models in generating singable lyrics. It also explains how singable, or not, a set of lyrics is. Prosody refers to patterns of rhythm and intonation, and focuses on how melody complements text: for instance, by ensuring that the musical elements reflect the mood of the lyrics through the emphasis of important words or syllables, the alignment of strong and weak beat notes, and so on.
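
To make the idea of prosodic alignment concrete, here is a minimal Python sketch. It is not XAI-Lyricist's actual method, which the article does not detail. It simply assumes each syllable is sung on one note, marks syllables as stressed or unstressed and notes as strong or weak, and measures how often the two agree. The function names, the example line, and the 0/1 encoding are all hypothetical.

```python
from typing import List


def singability_score(stresses: List[int], beats: List[int]) -> float:
    """Fraction of syllables whose stress matches the beat they fall on.

    stresses: 1 = stressed syllable, 0 = unstressed (assumed encoding).
    beats:    1 = strong beat note,  0 = weak beat note (assumed encoding).
    """
    if len(stresses) != len(beats):
        raise ValueError("need exactly one note per syllable")
    if not stresses:
        return 0.0
    matches = sum(1 for s, b in zip(stresses, beats) if s == b)
    return matches / len(stresses)


def clashing_syllables(syllables: List[str], stresses: List[int],
                       beats: List[int]) -> List[str]:
    """A crude 'explanation': the syllables that clash with the melody."""
    return [syl for syl, s, b in zip(syllables, stresses, beats) if s != b]


# Hypothetical lyric line: "MU-sic HEALS the MIND"
syllables = ["mu", "sic", "heals", "the", "mind"]
stresses = [1, 0, 1, 0, 1]

beats_good = [1, 0, 1, 0, 1]  # strong beats land on stressed syllables
beats_bad = [0, 1, 1, 0, 1]   # the first two notes are swapped

print(singability_score(stresses, beats_good))
print(singability_score(stresses, beats_bad))
print(clashing_syllables(syllables, stresses, beats_bad))
```

In this toy version, a higher score means more stressed syllables land on strong beats, and the list of clashing syllables plays the role of a very rough explanation of why a line is harder to sing.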

In testing, XAI-Lyricist was found to improve the singability of language model-generated lyrics, with 14 test subjects affirming that the explanations it provided helped them interpret lyrical singability faster than reading plain-text lyrics. Earlier this year, the team, led by Wang’s PhD student Liang Qihao, presented their findings at the Special Track on Human-Centred Artificial Intelligence of the International Joint Conference on Artificial Intelligence 2024 (IJCAI 2024), a highly selective track with an acceptance rate of only 5%.

XAI-Lyricist can also be applied to learning new languages, says Wang. “A language learner can choose whatever song they like, but the particular lyrics may not be suitable because of the vocabulary and so on.” For instance, the words used can’t be too complex if the user is a young child. XAI-Lyricist helps by placing constraints on lyric generation “so that we can customise the generation to match the application scenario,” says Wang. “Similarly, for CocoLyricist, we can use XAI to consider how the lyrics generated can reflect a patient’s life story.”
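
One simple way to picture such a constraint is a vocabulary filter applied to candidate lyric lines. The Python sketch below is only an illustration under that assumption. The article does not say how XAI-Lyricist enforces its constraints, and the word list, candidate lines, and function names here are hypothetical.

```python
import re
from typing import Iterable, List, Set


def within_vocabulary(line: str, allowed: Set[str]) -> bool:
    """True if every word in the line appears in the learner's word list."""
    words = re.findall(r"[a-z']+", line.lower())
    return bool(words) and all(word in allowed for word in words)


def filter_lines(candidates: Iterable[str], allowed: Set[str]) -> List[str]:
    """Keep only the candidate lines that satisfy the vocabulary constraint."""
    return [line for line in candidates if within_vocabulary(line, allowed)]


# Hypothetical word list for a young learner.
allowed_words = {"the", "sun", "is", "up", "i", "sing", "a", "song"}

# Hypothetical candidate lines, e.g. produced by a language model.
candidates = [
    "The sun is up",
    "I sing a melancholy song",  # "melancholy" is outside the word list
    "I sing a song",
]

kept = filter_lines(candidates, allowed_words)
print(kept)
```

Filtering after generation is the bluntest possible approach. A real system could equally steer the model during generation, but the effect on the learner is the same: only lines within reach of their vocabulary survive.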

High-quality singing and songs that make sense

Another system Wang plans to integrate into CocoLyricist helps strengthen the coherence and musicality of the generated lyrics. By leveraging keywords and integrating pitch, alongside other elements, into its music encoding, the KeYric system demonstrated a 19% improvement in lyric quality over existing models.

“The idea was to generate actual songs that people can follow” and that make sense on a word, sentence, and full-text level, explains Wang, whose postdoctoral research fellow Ma Xichu spearheaded the work.

Additionally, Wang will incorporate singing voice synthesis (SVS) — a type of technology that can generate high-quality singing voices from musical scores, lyrics, and other input data, without needing a human singer — into the new system to treat stroke patients. SVS is useful because “assuming we have the music and we have the lyrics, how do we generate the actual song for people to hear?” he explains.

“A song can serve as an example for patients to mimic, because many of them are not musically trained. So it’s much easier if they have something to listen to, rather than just looking at music notation and lyrics, and trying to sing from that,” he says.

Existing SVS systems, however, struggle with the precise control of singing techniques, which in turn translates to singing voices that lack expression. To bridge this gap between artificial and human singers, Wang and his PhD student Zhao Junchuan created SinTechSVS, the first SVS system capable of controlling singing techniques.

SinTechSVS employs machine learning to efficiently annotate singing techniques. Experiments showed that it significantly improved both the quality and the expressiveness of synthesised vocal performances compared with state-of-the-art SVS systems.

All three of Wang’s inventions, alongside the new CocoLyricist, are examples of the good we can reap from AI. “Nowadays, everyone is talking about AI and its applications. Of course, technology can do harm, but it can also do a lot of good things,” he says. “What we are doing here is basically using AI for human health and societal good.”