29 November 2022 — Can Artificial Intelligence replace musicians? Probably not, but it can definitely help musicians unlock their creativity. The Sound & Music Computing for Human Health & Potential (SMC4HHP) seminar and concert, held at Innovation 4.0 on Saturday, 12 November 2022, proved just that.

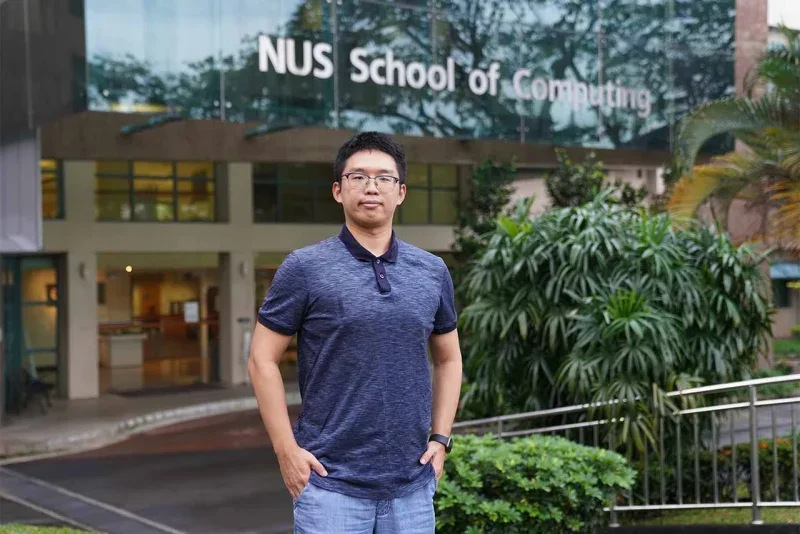

This was the second live concert hosted by the Sound & Music Computing (SMC) Lab to showcase the talent and creativity of NUS Computing students. Its performers included lab members and students of the CS4347/CS5647 Sound and Music Computing module, taught by Associate Professor Wang Ye from the Department of Computer Science.

The SMC Lab was established by Prof Wang Ye when he joined the NUS School of Computing in 2002. Although sound and music computing was already an active research field overseas at the time, the lab was the first of its kind in Singapore. A recently published paper by the lab, “MM-ALT: A Multimodal Automatic Lyric Transcription System,” also received the top-rated paper award at ACM Multimedia in 2022.

Sound & Music Computing Lab aims to educate and promote student well-being

One of the SMC4HHP concert’s main objectives was to educate. The event opened with a seminar by Dr. Suzy Styles from Nanyang Technological University (NTU), “The neural basis of creative audition: How human brains learn to generate speech and music,” which intrigued the audience with the neural wonders of the human brain. A second objective was to promote student well-being, especially in the age of the pandemic, which heightened mental health problems among students. Additionally, students had the opportunity to apply what they had learned in their research and to showcase both their musical talents and the lab’s latest breakthrough in music AI.

“I hoped that the showcases of our state-of-the-art research outcomes could inspire our junior students to leverage on the foundational knowledge they have learned in CS4347/CS5647 to make their own unique contributions in the future,” Prof Wang Ye said.

Composing music and lyrics using AI

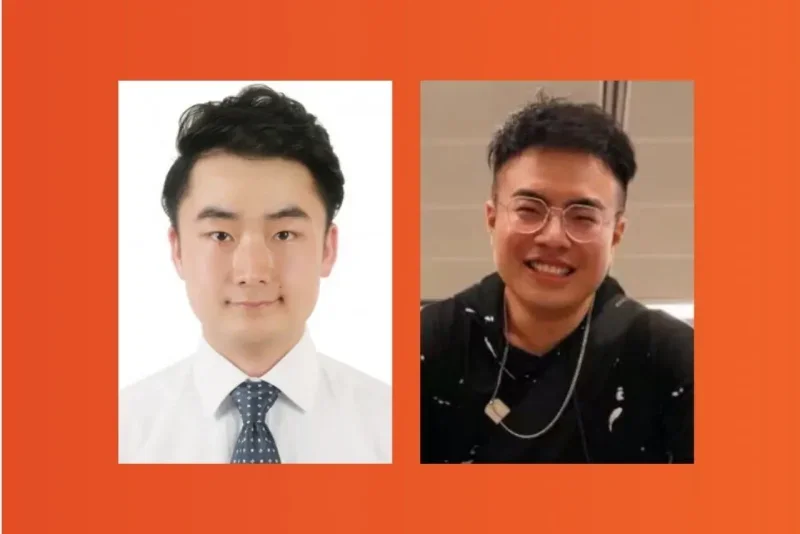

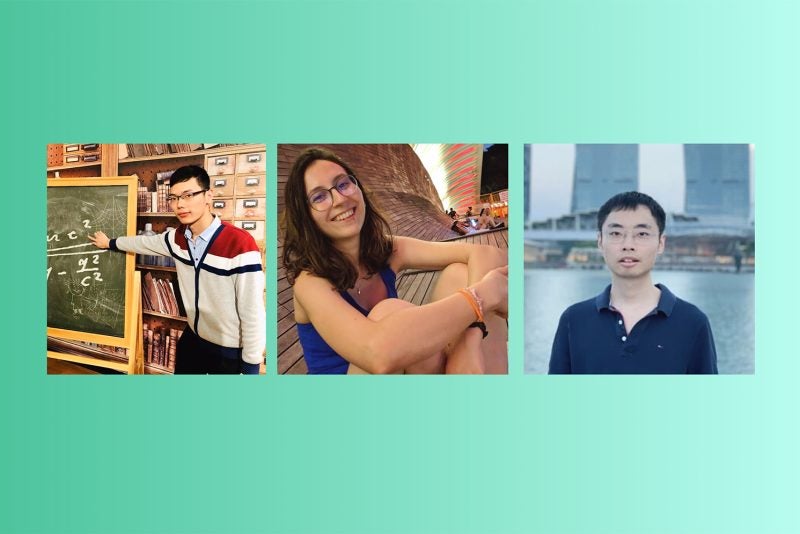

At the concert, students entertained the audience with familiar classics from artists such as Queen, Teresa Teng, John Lennon, and many more. Three performances stood out: a generated accompaniment to Auld Lang Syne by Zhao Jingwei and Wang Yixin, an original computer music piece by Shawn Ng, and AI-generated lyrics to Danny Boy by Stan Ma and Justin Tzuriel.

To find a fitting accompaniment for Auld Lang Syne, Jingwei, a PhD student at SMC Lab, used his AI model, AccoMontage. It is a hybrid system that combines rule-based search, which looks for suitable melodies from a music commons, with deep learning, which transposes and re-harmonises the accompaniment melody onto Auld Lang Syne.

Another interesting demonstration was The Persistence of Memory. To produce this original musical piece, Shawn, an undergraduate student in the CS4347/CS5647 module, used a modular synthesiser, a customisable electronic instrument that can generate and manipulate sounds in multiple ways. “The modular synthesiser I used comprises modules that use different synthesis techniques for sound generation, including physical modelling and frequency modulation (FM) synthesis, as well as various digital signal processing (DSP) techniques to manipulate and warp these sounds, some of these concepts being directly related to what is taught in the CS4347 Sound and Music Computing module,” he explained.
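For readers curious about what FM synthesis involves, the idea can be sketched in a few lines of Python. This is only an illustration of the general technique, not Shawn's setup: the function name `fm_tone` and all parameter values below are hypothetical. One sine wave (the modulator) continuously shifts the phase of another (the carrier), and the modulation index controls how bright or metallic the result sounds.

```python
import math


def fm_tone(carrier_hz, modulator_hz, index, duration_s, sample_rate=44100):
    """Return samples of a simple two-oscillator FM tone, each in [-1, 1].

    Illustrative sketch only; the name and parameters are assumptions.
    """
    n_samples = int(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        # The modulator shifts the carrier's phase; `index` sets how strongly.
        modulation = index * math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + modulation))
    return samples


# Half a second of a 440 Hz carrier modulated at 110 Hz (hypothetical values).
tone = fm_tone(carrier_hz=440.0, modulator_hz=110.0, index=2.0, duration_s=0.5)
```

Setting `index` to 0 reduces the output to a plain sine wave at the carrier frequency, while larger values add more overtones. A real modular synthesiser offers far richer control than this sketch suggests.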

Besides generating tunes, AI can write lyrics too! Stan, a PhD candidate at SMC Lab, presented an AI-lyricist that generated lyrics to Danny Boy, a famous Irish folk song. Because the original lyrics require contextual knowledge that beginner English learners may not easily understand, the AI-lyricist generated new lyrics more suitable for language learning. Stan also shared that the AI-lyricist supports SLIONS, an application for students to learn languages through singing songs.

The students performed many more tunes, and the full list can be found on SMC Lab’s website.