24 Aug 2023 — NUS Computing faculty and students excelled at the 61st Annual Meeting of the Association for Computational Linguistics (ACL), which was held in July in Toronto, Canada.

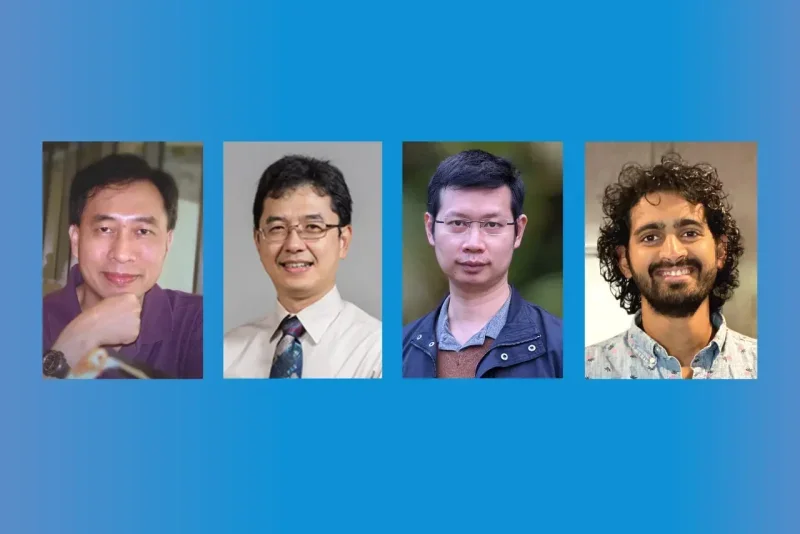

The paper “CAME: Confidence-guided Adaptive Memory Efficient Optimisation”, which aims to make the training of large AI models such as GPT more efficient, won the Outstanding Paper Award. It was authored by Yang Luo, Zheng Zangwei, Zhuo Jiang and NUS Young Presidential Professor Yang You, together with Xin Jiang and Xiaozhe Ren from Huawei. Yang Luo and Zhuo Jiang are Master’s students, while Zangwei is a PhD student.

Training deep learning models consumes a significant amount of memory, and the consumption grows with the number of parameters in large language models. The Confidence-guided Adaptive Memory Efficient (CAME) optimiser designed by the team reduces memory usage without compromising performance. CAME uses confidence-guided updates, which adjust the size of each update according to the model’s confidence, and applies non-negative matrix factorisation to the confidence matrix it introduces, which keeps the additional memory overhead small. The CAME optimiser can also significantly reduce the cost of training large language models.
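To give a sense of why factorising a matrix of optimiser statistics saves memory, here is a minimal Python sketch. It is not the team's implementation: it assumes the simplest rank-1 scheme, in which a non-negative matrix is summarised by its row sums and column sums, and the function names `factored_state_sizes` and `rank1_approx` are hypothetical names chosen for this illustration.

```python
def factored_state_sizes(rows, cols):
    """Count the values stored for one rows x cols matrix of statistics.

    Returns (full, factored): the full matrix needs rows * cols values,
    while a rank-1 factorisation keeps only one vector of row sums and
    one vector of column sums.
    """
    return rows * cols, rows + cols


def rank1_approx(matrix):
    """Rebuild a non-negative matrix from its row and column sums.

    Illustrative assumption: entry (i, j) is approximated by
    row_sum[i] * col_sum[j] / total. The result is exact when the
    matrix has rank 1 and only an approximation otherwise.
    """
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(col) for col in zip(*matrix)]
    total = sum(row_sums)
    return [[r * c / total for c in col_sums] for r in row_sums]


full, factored = factored_state_sizes(4096, 4096)
print(f"full: {full} values, factored: {factored} values")

# A rank-1 example: the second row is twice the first.
print(rank1_approx([[1.0, 2.0], [2.0, 4.0]]))
```

For a 4096 by 4096 weight matrix, the factored form stores 8,192 values in place of roughly 16.8 million, which is the kind of saving that makes this family of optimisers attractive for large models.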

“Our team’s pursuit was to bridge the gap between efficiency and performance,” said Zangwei. “With the CAME optimiser, we have not only advanced the frontiers of AI training but also paved the way for more sustainable and cost-effective implementations.”

At the same event, another paper, “Tell2Design: A Dataset for Language-Guided Floor Plan Generation”, received the Area Chair Award. The paper is a collaboration between NUS and the Singapore University of Technology and Design (SUTD). The team comprises Dr Yang Zhou, Dr Mohammed Haroon Dupty and Professor Lee Wee Sun from NUS Computing, and Dr Sicong Leng, Associate Professor Sam Conrad Joyce and Associate Professor Lu Wei from SUTD.

The research involves generating floor plan designs directly from natural language descriptions. The team introduced a novel dataset, Tell2Design (T2D), which contains more than 80k floor plan designs associated with natural language instructions. They also proposed a Sequence-to-Sequence model that can serve as a strong baseline for future research. The team believes that language-guided design generation will improve productivity for designers and architects alike.

“Generating designs from natural language provides different challenges compared to current popular generative AI tasks of generating artistic or realistic content. Hence this is an interesting line of research to pursue,” said Prof Lee.